Next: Tutorial

Up: TUTTI Reference

Previous: TUTTI Reference

Contents

Index


What is TUTTI

TUTTI offers a unified test framework that uses various test tools. It introduces a test infrastructure for an organization producing software. The features of TUTTI are:

- User friendliness, by means of a simple, uniform and intuitive user interface to (existing) test tools

- Tests are well organized

- Tests can easily be automated

- Legible test results can easily be generated

- Easy integration with Configuration Management and a development environment

Ideas behind TUTTI

A unit test focuses on testing one piece of software. In our context, a unit is a library or a daemon process that is part of our software. Unit testing is a verification method used to gain confidence in the quality of the software. Six ideas play a key role in unit testing:

- The aim of any testing activity is finding defects in software.

- When no defects are found after thorough testing, we have confidence in the quality of the software.

- Testing is not always the best verification method. Whether it is depends on the risks and on the effort that would be needed to test sufficiently.

- The quality of software may still be poor despite its proven functional behavior. The non-functional behavior of the software is also relevant.

- Both white-box and black-box tests are important for unit testing.

- The earlier a defect is found, the lower the cost of fixing it [6].

Developing unit tests

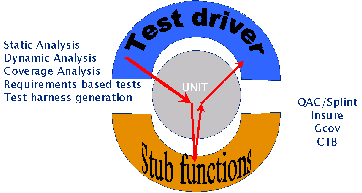

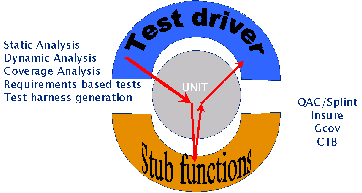

Figure 6.1: A unit is often tested in a simulated environment (stubs). A driver is needed to invoke the functions defined in the unit and to check the outcome.
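The arrangement in Figure 6.1 can be sketched in C. This is a minimal illustration under stated assumptions, not TUTTI code: the unit `cache_get`, its dependency `storage_read` and the driver `run_cache_tests` are hypothetical names. In a real build the unit would be linked against the stub instead of the real storage layer; here everything is kept in one file so the example is self-contained.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical dependency of the unit. In production it would read
 * from a file or a daemon; in the test it is replaced by the stub below. */
int storage_read(const char *key, char *buf, size_t len);

/* --- Unit under test (hypothetical) ------------------------------- */
/* Looks up key and copies its value into buf via storage_read.
 * Returns 0 on success, -1 on invalid arguments or lookup failure. */
int cache_get(const char *key, char *buf, size_t len)
{
    if (key == NULL || buf == NULL || len == 0)
        return -1;
    return storage_read(key, buf, len);
}

/* --- Stub: the simulated environment ------------------------------ */
static int stub_calls = 0;  /* records how often the unit used the stub */

int storage_read(const char *key, char *buf, size_t len)
{
    stub_calls++;
    if (strcmp(key, "answer") != 0)
        return -1;              /* unknown key */
    if (len < sizeof "42")
        return -1;              /* buffer too small for the canned value */
    strcpy(buf, "42");
    return 0;
}

/* --- Driver: invokes the unit and checks the outcome -------------- */
void run_cache_tests(void)
{
    char buf[16];

    assert(cache_get("answer", buf, sizeof buf) == 0);
    assert(strcmp(buf, "42") == 0);
    assert(cache_get("missing", buf, sizeof buf) == -1);
    assert(cache_get(NULL, buf, sizeof buf) == -1);
    assert(stub_calls == 2);    /* the NULL key never reaches the stub */
}
```

Calling run_cache_tests() once from the main function of a test program exercises the unit; the stub_calls counter shows that a stub can also record how the unit interacted with its environment.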

Several test techniques are relevant when developing unit tests.

First, the maintainability, correctness and robustness of the software must be assured by static analysis. Static analysis is a test technique that enables a tester or programmer to analyze the software statically, meaning that none of the code needs to be executed. A great benefit of static analysis is that the code need not be complete in order to be tested, and about 40% of possible software defects can be located this way [7]. Therefore, static analysis must be carried out as the first test.

Of course, it is also important to exercise the code by so-called dynamic testing (i.e. testing the dynamic behavior of the code by executing it). The design of a dynamic test is the most important step of this part. The design is based on two approaches:

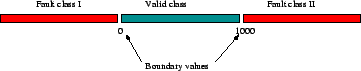

- Black-box test design.

Test cases are derived from the requirements by using formal test design techniques such as boundary value analysis, cause-effect graphing or equivalence class partitioning [5]. For example, when the user can enter the lifetime of a certain hash value between 0 and 1000 ms, this boils down to:

- Try to find an input element representing each input class (-10, 10, 2000).

- Try to find the inputs that focus on the boundaries of the requirement (0, 1000, 1001).

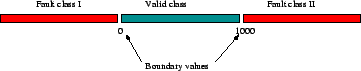

Figure 6.2: Selecting inputs for the lifetime of a hash value using the equivalence class partitioning and boundary value analysis design techniques.
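The inputs selected above can be turned into a table-driven black-box test. The sketch assumes a hypothetical function set_hash_lifetime() that returns 0 when a lifetime is accepted and -1 when it is rejected, and it reads "between 0 and 1000 ms" as an inclusive range, which is consistent with 1001 being the first invalid boundary value. The implementation shown only exists so that the example compiles; the test cases themselves are derived from the requirement alone.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical unit under test: accepts a hash-value lifetime in ms
 * if it lies in the inclusive range 0..1000 (assumed reading of the
 * requirement). Returns 0 when accepted, -1 when rejected. */
int set_hash_lifetime(int ms)
{
    return (ms >= 0 && ms <= 1000) ? 0 : -1;
}

struct lifetime_case {
    int input;      /* lifetime in ms */
    int expected;   /* expected return value of the unit */
};

/* Black-box driver: every case comes from the requirement, not from
 * the code of set_hash_lifetime(). */
void run_lifetime_tests(void)
{
    static const struct lifetime_case cases[] = {
        /* one representative per equivalence class */
        {  -10, -1 },   /* below the valid range  */
        {   10,  0 },   /* inside the valid range */
        { 2000, -1 },   /* above the valid range  */
        /* boundary values */
        {    0,  0 },
        { 1000,  0 },
        { 1001, -1 },
    };
    size_t n = sizeof cases / sizeof cases[0];

    for (size_t i = 0; i < n; i++)
        assert(set_hash_lifetime(cases[i].input) == cases[i].expected);
}
```

Keeping the cases in a table separates the test design (the data) from the test mechanics (the loop), so adding a newly identified class or boundary is a one-line change.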

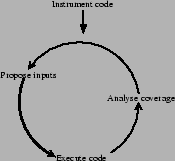

- White-box test design.

Test cases are derived from the software itself using a reverse-engineering approach. Coverage analysis is often used for this purpose: it determines how many (and which) of the statements in the software are actually exercised by the test. Using the results of this coverage analysis, the inputs are adapted and the code is executed with the new inputs. This iterative process results in a set of inputs that causes enough of the code to be executed by the test. After the test inputs have been generated this way, checks are inserted in the code that verify the expected effect or result of each specific input.

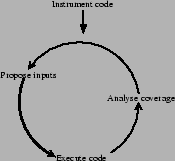

Figure 6.3: Design of a unit test using a white-box approach. Test inputs are derived iteratively from the outcome of the coverage analysis.
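The iterative loop of Figure 6.3 can be illustrated with hand-made coverage counters. A tool such as gcov collects this kind of information automatically; the manual instrumentation below, the unit clamp_lifetime() and the helper uncovered_branches() are hypothetical and only serve to show the idea.

```c
#include <assert.h>

/* Hand-made coverage counters, one per branch of the unit. This manual
 * version only illustrates what a coverage tool records. */
enum { BR_NEGATIVE, BR_TOO_LARGE, BR_IN_RANGE, BR_COUNT };
static unsigned hits[BR_COUNT];

/* Hypothetical unit under test, instrumented by hand: limits a
 * lifetime in ms to the range 0..1000. */
int clamp_lifetime(int ms)
{
    if (ms < 0) {
        hits[BR_NEGATIVE]++;
        return 0;
    }
    if (ms > 1000) {
        hits[BR_TOO_LARGE]++;
        return 1000;
    }
    hits[BR_IN_RANGE]++;
    return ms;
}

/* Coverage analysis: returns how many branches were never executed. */
int uncovered_branches(void)
{
    int missing = 0;

    for (int b = 0; b < BR_COUNT; b++)
        if (hits[b] == 0)
            missing++;
    return missing;
}

/* Driver after iteration: a first run with only the input 500 left two
 * branches uncovered, so -5 and 5000 were added. Each input is
 * followed by a check of its expected result. */
void run_clamp_tests(void)
{
    assert(clamp_lifetime(500) == 500);
    assert(clamp_lifetime(-5) == 0);
    assert(clamp_lifetime(5000) == 1000);
    assert(uncovered_branches() == 0);
}
```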

Common problems with unit testing

Test tools used in a development environment have at least two drawbacks. A major drawback that is often experienced is the lack of clarity of the resulting output. Users of a test tool often find themselves wasting time examining output that is difficult to comprehend. Test output is frequently cluttered with irrelevant information, or it reports problems that are doubtful or for which there is no consensus.

An example of this is the analysis of a program file test.c with lclint or splint as a static

analysis tool.

```c
#include <stdio.h>

int main ( void )
{
    int p;
    printf("hello %i\n", p );
    return 0;
}
```

The output of splint is written to stderr:

```
test.c: (in function main)
test.c:6:26: Variable p used before definition
An rvalue is used that may not be initialized to a value on some execution
path. (Use -usedef to inhibit warning)
test.c:6:5: Called procedure printf may access file system state, but globals
list does not include globals fileSystem
A called function uses internal state, but the globals list for the function
being checked does not include internalState (Use -internalglobs to inhibit
warning)
test.c:6:5: Undocumented modification of file system state possible from call
to printf: printf("hello %i\n", p)
report undocumented file system modifications (applies to unspecified
functions if modnomods is set) (Use -modfilesys to inhibit warning)

Finished checking --- 3 code warnings
```

As this example shows, a developer can have a hard time analyzing this output, which is produced by only 8 lines of code. Only one of the output lines (the second) probably contains useful information.

Different tools need different options, and tools are often simply difficult to use. Moreover, the easiest way of using a particular tool is often not the best way. For these reasons, it is recommended to create wrappers around the test tools that provide a uniform interface both to the tools and to their results. Besides being legible, the output must make clear whether the test failed or succeeded without requiring the user to read more than two lines.
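A minimal sketch of such a uniform result interface, assuming the tool output is already available as a string in compiler error format (a line starting with file:line: or file:line:column:). The functions count_diagnostics() and print_verdict() are hypothetical; they are not part of TUTTI or of any tool mentioned here and only show how a wrapper can reduce arbitrary output to a two-line verdict.

```c
#include <assert.h>
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Returns 1 if the line (up to its newline) starts with
 * "<file>:<digits>:", i.e. the compiler error format. */
static int is_diagnostic(const char *line)
{
    const char *eol = strchr(line, '\n');
    const char *colon = strchr(line, ':');
    const char *p;

    /* A file name must precede the first colon on the same line. */
    if (colon == NULL || colon == line || (eol != NULL && colon > eol))
        return 0;
    p = colon + 1;
    if (!isdigit((unsigned char)*p))    /* a line number must follow */
        return 0;
    while (isdigit((unsigned char)*p))
        p++;
    return *p == ':';                   /* ... terminated by a colon */
}

/* Counts the diagnostics in the complete output of a test tool. */
int count_diagnostics(const char *output)
{
    const char *line = output;
    int count = 0;

    assert(output != NULL);
    while (*line != '\0') {
        const char *next = strchr(line, '\n');
        if (is_diagnostic(line))
            count++;
        if (next == NULL)
            break;
        line = next + 1;
    }
    return count;
}

/* Prints a two-line verdict instead of the raw output and returns an
 * exit status: 0 if no diagnostics were found, 1 otherwise. */
int print_verdict(const char *tool, const char *output)
{
    int n = count_diagnostics(output);

    printf("%s: %s\n", tool, n == 0 ? "PASSED" : "FAILED");
    printf("%d diagnostic(s)\n", n);
    return n == 0 ? 0 : 1;
}
```

Applied to the splint output shown earlier, count_diagnostics() finds the three lines that start with test.c:6:, which matches splint's own count of 3 code warnings; the explanatory continuation lines are ignored.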

Bubble provides an interface to the test tools insure, QAC, splint, gcov and others. Its user interface is similar to that of an ordinary compiler.

As a result, the Makefile need not be adapted to support any of these tools. The tools that either instrument or analyze the source code can be invoked as a compiler wrapper named toolname_compiler. The output of the analysis, or of the execution of the instrumented code, is analyzed with a tool named toolname_scan. The next example shows how the previous file is analyzed with splint and QAC.

qac_compiler -c -g -I /usr/local/include test.c

This results in a file test.qac that is analyzed by the tool qac_scan:

qac_scan

..

Next, we analyze the code with splint:

splint_compiler -c -g -I /usr/local/include test.c

This results in a file test.lint, which can be analyzed with the tool splint_scan:

splint_scan

...
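The naming in these examples (test.c yields test.qac for QAC and test.lint for splint) suggests how a compiler-style wrapper can find its input among ordinary compiler arguments and name its analysis file. The sketch below is an assumption about such a wrapper, not the actual implementation of qac_compiler or splint_compiler; find_source() and analysis_file_name() are hypothetical helpers.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Returns 1 if name ends in ".c" and has a non-empty stem. */
static int is_c_source(const char *name)
{
    size_t len = strlen(name);
    return len > 2 && strcmp(name + len - 2, ".c") == 0;
}

/* Hypothetical helper: returns the first C source file among
 * compiler-style arguments, skipping options such as -c and -g and
 * the argument that follows -I or -o. Returns NULL if none is found. */
const char *find_source(int argc, char **argv)
{
    for (int i = 1; i < argc; i++) {     /* argv[0] is the wrapper name */
        if (strcmp(argv[i], "-I") == 0 || strcmp(argv[i], "-o") == 0) {
            i++;                          /* skip the option's argument */
            continue;
        }
        if (argv[i][0] != '-' && is_c_source(argv[i]))
            return argv[i];
    }
    return NULL;
}

/* Hypothetical helper: replaces the ".c" suffix of source by suffix,
 * e.g. ("test.c", "qac") -> "test.qac". Returns 0 on success, -1 if
 * source is not a C file or the result does not fit into out. */
int analysis_file_name(const char *source, const char *suffix,
                       char *out, size_t outlen)
{
    size_t stem;
    int n;

    assert(source != NULL && suffix != NULL && out != NULL);
    if (!is_c_source(source))
        return -1;
    stem = strlen(source) - 2;
    n = snprintf(out, outlen, "%.*s.%s", (int)stem, source, suffix);
    if (n < 0 || (size_t)n >= outlen)
        return -1;
    return 0;
}
```

Because such a wrapper accepts the same arguments as the compiler, it can replace the compiler command while the rest of the Makefile stays unchanged.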

By default, the analysis tools report their results in compiler error format. HTML output is generated by specifying the -html option, e.g.:

qac_scan -html


2004-05-28